Section: New Results

Event Recognition Based on Depth Image

Participants : Kuan-Ru Lee, Carlos F. Crispim Junior, Yu-Feng Chen, François Brémond.

keywords: event recognition, depth image

Introduction

We propose several methods to improve the recognition of events that involve a person and a bed. Our final goal is a system that supports medical personnel in the real-time observation of patients.

Experiments

The experiments were designed as a sequence of steps. The first step is a basic model that uses a relative time operator and two particular zones; the later steps build on this basic model. In principle, recognition performance should improve with each step.

Basic model

The idea of the basic model is to combine time and position information to recognize the events. To do so, we define two zones: one representing the bed and one representing the region surrounding the bed.

M before N

M and N represent the bed and the surrounding area, respectively, and the relative time operator is before. To distinguish the direction in which the person moves, we simply switch the two zones in the expression. However, the model failed to detect the activity when the video was not completely recorded. Because of the way our ontology language is designed, the record of what the person has already done is re-initialized in this case; we call this problem "Frame Jump". In addition, the model was sensitive to the location of the person: spurious detections occurred when a person standing next to the bed simply took a step backward without sitting on the bed. Given these two problems, we decided to develop a new method to replace the function that distinguishes the direction of the person.
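The zone-switching idea can be illustrated with a minimal Python sketch. It is not the ontology language used in this work: the per-frame zone labels, the function names, and the `max_gap` parameter are hypothetical, and the mapping of "N before M" to get-in-bed (and "M before N" to get-out-bed) is an assumption inferred from the zone definitions.

```python
from typing import List, Tuple

Interval = Tuple[int, int]  # (first_frame, last_frame), both inclusive


def zone_intervals(labels: List[str], zone: str) -> List[Interval]:
    """Group consecutive frames labelled `zone` into intervals."""
    intervals: List[Interval] = []
    start = None
    for i, label in enumerate(labels):
        if label == zone and start is None:
            start = i
        elif label != zone and start is not None:
            intervals.append((start, i - 1))
            start = None
    if start is not None:
        intervals.append((start, len(labels) - 1))
    return intervals


def before(a: Interval, b: Interval, max_gap: int = 5) -> bool:
    """True if `a` ends before `b` starts, at most `max_gap` frames earlier."""
    return a[1] < b[0] and b[0] - a[1] <= max_gap


def detect(labels: List[str], first: str, second: str, max_gap: int = 5) -> List[int]:
    """Frames at which the pattern `first before second` is completed."""
    hits = []
    for a in zone_intervals(labels, first):
        for b in zone_intervals(labels, second):
            if before(a, b, max_gap):
                hits.append(b[0])
    return hits


# Hypothetical per-frame zone labels: M = bed, N = surrounding area.
labels = ["N", "N", "N", "M", "M", "M", "N", "N"]
get_in = detect(labels, "N", "M")   # surrounding area, then bed
get_out = detect(labels, "M", "N")  # bed, then surrounding area
```

Switching the two zone arguments is the only change needed to detect the opposite direction. Because the rule relies on position alone, any crossing of the zone boundary completes the pattern, which is consistent with the sensitivity to location described above.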

Distance Analysis between the Person and the Bed

To recognize the events get-in-bed and get-out-bed, we compute the vertical distance between the person and a reference line that passes horizontally through the center of the bed. To prevent noise in the instantaneous distance values from affecting the result, we use a majority voting rule to determine the general direction in which the person is moving.

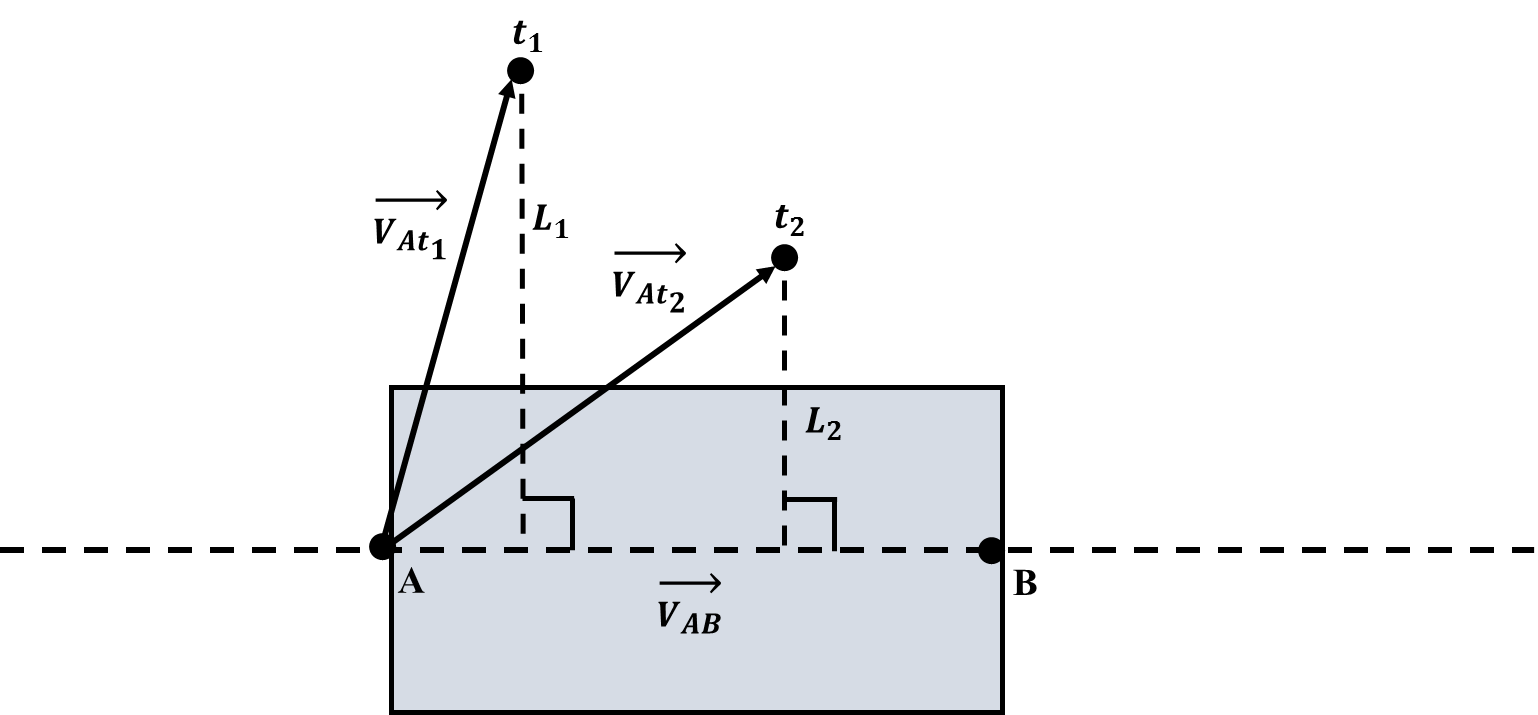

In Figure 24, the rectangle represents the bed zone, and a horizontal line passes through the reference points A and B. The point t represents the position of the person at frame i, where i = 1, 2, ..., N. By projecting t onto the reference line, the distance L between t and the reference line can be easily calculated. The majority voting rule then provides an average value that represents the final distance.
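One possible reading of this procedure is sketched below in Python. The coordinates, the noise threshold `eps`, and the direction labels are assumptions made for illustration; the actual voting rule used in the experiments may differ.

```python
from collections import Counter
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in image coordinates


def vertical_distance(t: Point, a: Point, b: Point) -> float:
    """Distance L from t to the horizontal reference line through a and b."""
    y_ref = (a[1] + b[1]) / 2.0  # a and b share the same y on a horizontal line
    return abs(t[1] - y_ref)


def majority_direction(track: List[Point], a: Point, b: Point, eps: float = 1.0) -> str:
    """Vote frame by frame on whether the person approaches or leaves the line."""
    dists = [vertical_distance(t, a, b) for t in track]
    votes = []
    for prev, cur in zip(dists, dists[1:]):
        delta = cur - prev
        if delta < -eps:
            votes.append("approaching")
        elif delta > eps:
            votes.append("leaving")
        # changes smaller than eps are treated as noise and cast no vote
    if not votes:
        return "static"
    return Counter(votes).most_common(1)[0][0]


# Hypothetical reference points and person positions over seven frames.
A, B = (100.0, 200.0), (300.0, 200.0)
track = [(150.0, y) for y in (260, 250, 252, 235, 220, 222, 205)]
direction = majority_direction(track, A, B)
```

In this example two frames move slightly away from the line, but they are outvoted by the four frames that move toward it, so a single noisy measurement does not flip the estimated direction.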

Frame Jump

Because of how the detection process works, the system starts to detect the person only once he or she moves. As a result, some frames may be lost while the person is still on the bed but in the process of getting out. Based on our ontology language, we designed a new model that focuses only on the events that happen in the area surrounding the bed.

Performance

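| Method | Get in Bed: TP | Get in Bed: FP | Get in Bed: FN | Get in Bed: Pr. | Get in Bed: Re. | Get out Bed: TP | Get out Bed: FP | Get out Bed: FN | Get out Bed: Pr. | Get out Bed: Re. |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| A | 6 | 14 | 10 | 0.3 | 0.37 | 7 | 9 | 8 | 0.43 | 0.46 |
| B | 9 | 2 | 8 | 0.81 | 0.52 | 7 | 5 | 8 | 0.58 | 0.46 |
| B + C | 12 | 6 | 5 | 0.6 | 0.70 | 14 | 10 | 1 | 0.58 | 0.93 |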

In the previous sections, the basic model, which we label method A, faced two problems: frame jump and extra detections. These problems led to high numbers of false positives and false negatives. To eliminate the unnecessary extra detections, we propose method B, which analyses the distance between the person and the bed.

Table 10 shows the improvement in TP, FP, and FN. The FP count for method B is much lower than for method A (2 versus 14 for get-in-bed, 5 versus 9 for get-out-bed). The new approach, which uses an average filter and a majority voting rule to eliminate movement noise, reduces the chance of misdetection and improves overall performance. When we add the frame jump solution as method C and combine it with method B, the number of FN drops considerably (from 8 to 5 for get-in-bed and from 8 to 1 for get-out-bed). The increase in FP is caused by overlapping detections from the two methods.
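For reference, the precision (Pr.) and recall (Re.) columns follow the standard definitions Pr. = TP / (TP + FP) and Re. = TP / (TP + FN). The short Python sketch below recomputes them from the counts in the table; the function name and the dictionary layout are illustrative only, and the reported values appear to match these formulas up to rounding or truncation.

```python
from typing import Dict, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Return (precision, recall); a ratio with an empty denominator is 0.0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# (TP, FP, FN) counts copied from the table above.
counts: Dict[str, Dict[str, Tuple[int, int, int]]] = {
    "A": {"get_in": (6, 14, 10), "get_out": (7, 9, 8)},
    "B": {"get_in": (9, 2, 8), "get_out": (7, 5, 8)},
    "B + C": {"get_in": (12, 6, 5), "get_out": (14, 10, 1)},
}

scores = {
    method: {event: precision_recall(*c) for event, c in events.items()}
    for method, events in counts.items()
}

for method, events in scores.items():
    for event, (pr, re) in events.items():
        print(f"{method:6s} {event:8s} Pr.={pr:.3f} Re.={re:.3f}")
```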